Postman MCP Integration: AI-Powered API Testing for Any Client

Connect Postman with MCP clients (Claude, Copilot, Cursor, IntelliJ). Complete guide for AI-assisted API testing across development environments.

Picture this: You’re deep in API development, juggling multiple Postman collections, trying to remember which endpoints return which data structure, and constantly switching between your AI coding assistant and Postman to test APIs. Sound familiar?

Following my previous breakthrough with MCP MySQL Integration: Build AI-Powered Database Apps, where I discovered how AI could interact directly with databases through Model Context Protocol, I found myself in exactly this situation while working on a complex microservices project. The MySQL integration had shown me the power of giving AI direct access to data sources, but now I needed the same seamless integration for my API development workflow.

I had over 20 different API collections in Postman, each with dozens of endpoints, and I needed AI assistance to analyze response patterns and generate test cases. The constant context switching was killing my productivity—just like it was before I discovered MCP’s transformative potential for database integration.

That’s when the lightbulb moment hit: What if I could give my AI coding assistant direct access to all my Postman collections, letting it analyze, execute, and help me understand my APIs without ever leaving the conversation? Just like I had done with MySQL, but this time for API management across any MCP-compatible client.

Turns out, this isn’t just possible – it’s game-changing. And today, I’m sharing exactly how to build this integration step by step, supporting multiple AI clients including Claude Desktop, GitHub Copilot, Cursor, and IntelliJ.

Why This Integration Matters

In the modern development landscape, APIs are the backbone of virtually every application. Whether you’re building microservices, integrating third-party services, or developing mobile applications, you’re likely managing dozens or even hundreds of API endpoints. Postman has become the go-to tool for API development and testing, but it operates in isolation from your AI workflow.

The Model Context Protocol bridges this gap by allowing AI coding assistants to interact with external tools and services directly. Instead of manually copying API responses or describing your endpoints to your AI assistant, you can give it native access to your entire Postman workspace.

This integration becomes invaluable when you need to:

- Analyze API response patterns across multiple endpoints

- Generate comprehensive test cases for your collections

- Debug complex API workflows

- Document your APIs with AI-powered insights

- Compare different API versions or environments

Understanding Model Context Protocol (MCP)

Before diving into the implementation, let’s understand what makes MCP so powerful. Think of MCP as a standardized way for AI models to interact with external tools and data sources. It’s like giving your AI assistant a set of specialized tools that extend its capabilities beyond text generation.

Unlike one-off integrations that each need their own glue code, MCP provides a clean, standardized interface. Clients and servers exchange JSON-RPC 2.0 messages over a transport such as standard input/output, so the same server can work with any compliant AI client.

What makes MCP particularly elegant is its tool-based approach. Instead of overwhelming AI assistants with raw data, you define specific tools that they can use to interact with your services. This keeps interactions focused and efficient across different AI platforms.
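To make the tool-based approach concrete, here is a minimal sketch of what a tool definition and a tool call look like on the wire. The types are simplified, hypothetical stand-ins for what the MCP SDK provides, and the `collectionId` argument name and the `missingArguments` helper are my own assumptions for illustration.

```typescript
// Hypothetical, simplified shapes; the real MCP SDK defines richer types.
interface ToolDefinition {
  name: string;
  description: string;
  // JSON Schema describing the arguments the tool accepts.
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

// A JSON-RPC 2.0 request asking the server to run one tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

const getCollectionTool: ToolDefinition = {
  name: "get_collection",
  description: "Get detailed information about a specific collection",
  inputSchema: {
    type: "object",
    properties: {
      // Assumed argument name, chosen for this sketch.
      collectionId: { type: "string", description: "Postman collection ID" },
    },
    required: ["collectionId"],
  },
};

// Returns the names of required arguments that a call did not supply.
function missingArguments(tool: ToolDefinition, request: ToolCallRequest): string[] {
  const required = tool.inputSchema.required ?? [];
  return required.filter((key) => !(key in request.params.arguments));
}

const exampleCall: ToolCallRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "get_collection", arguments: { collectionId: "abc-123" } },
};
```

The AI client picks a tool by name and sends only the arguments the schema describes, which is what keeps each interaction small and focused.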

Setting Up Your Postman MCP Server

The heart of our integration is a custom MCP server that acts as a bridge between AI clients and Postman’s API. This server translates AI requests into Postman API calls and formats the responses in a way that AI assistants can understand and work with effectively.

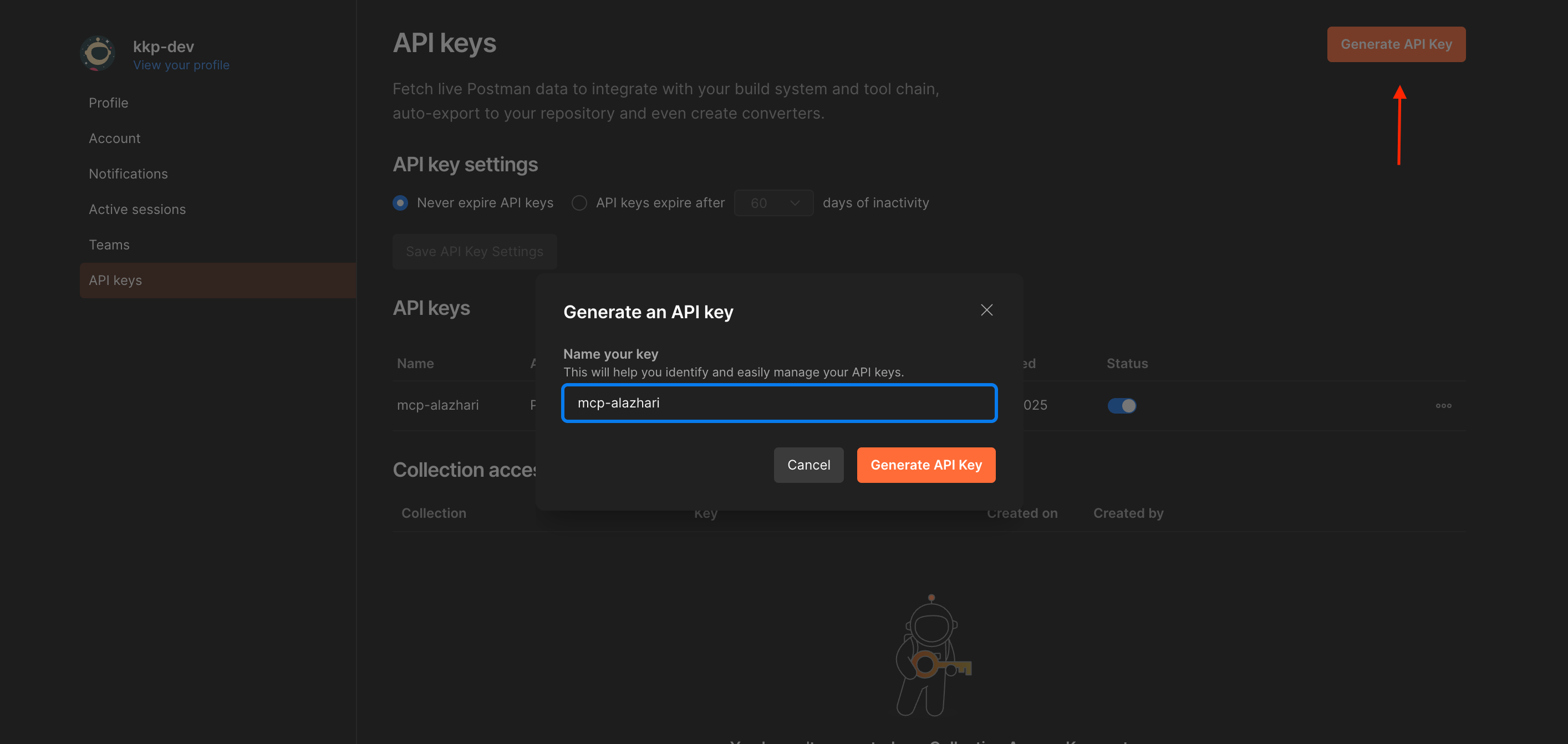

Getting Your Postman API Key

First, you’ll need a Postman API key. This key lets our MCP server read your collections and environments through the Postman API.

To create a new API key, go to your Postman account settings, click on “API keys” in the left sidebar, then click “Generate API Key”. Give your key a descriptive name like “mcp-integration” to help you identify its purpose later.

Postman API key generation interface - Creating a dedicated API key for MCP integration with descriptive naming

Pro tip: Create a dedicated API key specifically for this integration. This makes it easier to manage permissions and revoke access if needed.

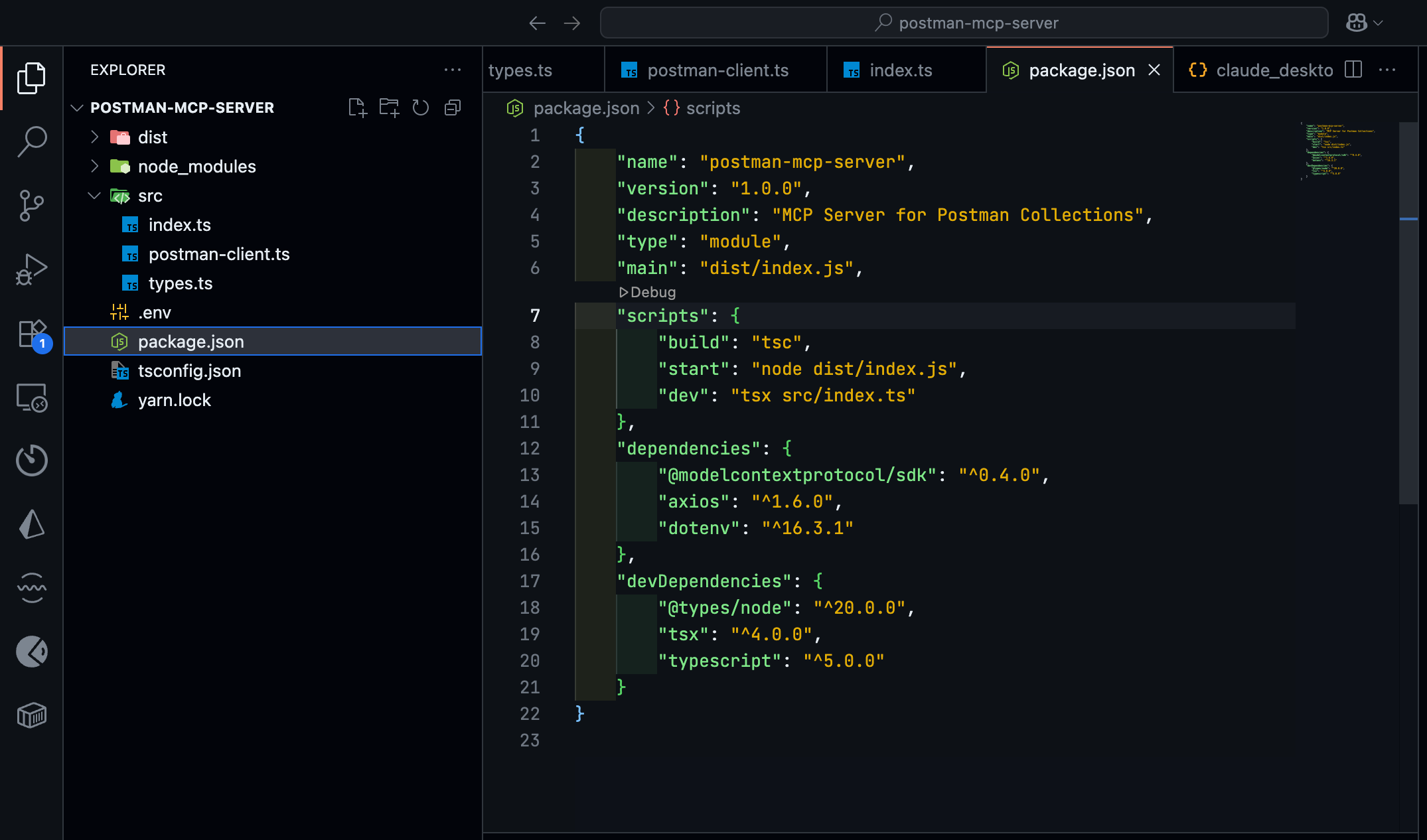

Project Structure and Dependencies

Our MCP server is built with TypeScript and Node.js, leveraging the official MCP SDK. The key dependencies include:

- @modelcontextprotocol/sdk - The official MCP SDK

- axios - For making HTTP requests to Postman’s API

- dotenv - For secure environment variable management

Here’s the essential project structure:

package.json (key configuration; note that JSON files cannot contain comments):

    {
      "name": "postman-mcp-server",
      "type": "module",
      "dependencies": {
        "@modelcontextprotocol/sdk": "^0.4.0",
        "axios": "^1.6.0",
        "dotenv": "^16.3.1"
      }
    }

The "type": "module" declaration is crucial because the MCP SDK uses ES modules. This was actually a stumbling block during my initial implementation – the server would work perfectly during development but fail when integrated with AI clients due to CommonJS/ES module conflicts.

Complete Postman MCP server project structure in VS Code showing package.json configuration, TypeScript files, and proper ES module setup

Core Server Implementation

The MCP server defines several tools that AI clients can use to interact with your Postman collections:

    // Abbreviated listing: in a full MCP tool definition, each entry
    // also carries an inputSchema (JSON Schema) describing its arguments.
    const tools = [
      {
        name: "list_collections",
        description: "List all Postman collections",
      },
      {
        name: "get_collection",
        description: "Get detailed information about a specific collection",
      },
      {
        name: "list_requests",
        description: "List all requests in a collection",
      },
      {
        name: "get_request_details",
        description: "Get detailed information about a specific request",
      },
    ];

Each tool is designed to provide AI assistants with specific capabilities while maintaining clean separation of concerns. The list_collections tool gives AI clients an overview of your workspace, while get_request_details allows deep inspection of individual API endpoints.
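As an illustration of what a tool like list_requests has to do internally, here is a minimal sketch that flattens a collection into a list of requests. It assumes the Postman Collection v2.1 layout, where folders are items that contain a nested `item` array. The `CollectionItem` and `RequestSummary` types and the `flattenRequests` helper are hypothetical names I chose for this example, not code from the original server.

```typescript
// Minimal shape of a Postman Collection v2.1 item: a request or a folder.
interface CollectionItem {
  name: string;
  request?: { method: string; url?: string | { raw?: string } };
  item?: CollectionItem[]; // present when the item is a folder
}

interface RequestSummary {
  path: string; // folder path plus request name
  method: string;
  url: string;
}

// Walks folders recursively and returns one summary per request.
function flattenRequests(items: CollectionItem[], prefix = ""): RequestSummary[] {
  const summaries: RequestSummary[] = [];
  for (const entry of items) {
    const path = prefix ? `${prefix} / ${entry.name}` : entry.name;
    if (entry.item) {
      // Folder: descend and carry the folder name along in the path.
      summaries.push(...flattenRequests(entry.item, path));
    } else if (entry.request) {
      // The URL may be a plain string or an object with a `raw` field.
      const rawUrl =
        typeof entry.request.url === "string"
          ? entry.request.url
          : entry.request.url?.raw ?? "";
      summaries.push({ path, method: entry.request.method, url: rawUrl });
    }
  }
  return summaries;
}

// Made-up sample data for illustration only.
const sampleItems: CollectionItem[] = [
  {
    name: "Users",
    item: [
      {
        name: "List users",
        request: { method: "GET", url: { raw: "https://api.example.com/users" } },
      },
    ],
  },
  {
    name: "Health check",
    request: { method: "GET", url: "https://api.example.com/health" },
  },
];

const flattened = flattenRequests(sampleItems);
```

Returning a flat list with folder paths gives the AI assistant a compact overview, instead of making it parse the full nested collection JSON.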

Also Read: MCP with Claude Desktop: Transform Your Development Workflow for the foundational MCP setup and integration patterns.

Handling the ES Module Challenge

One of the most common issues developers face when implementing MCP servers is the ES module compatibility problem. The MCP SDK is built as an ES module, but many developers default to CommonJS configuration, leading to runtime errors.

The solution involves three key configuration changes:

- Package.json: Add "type": "module"

- TypeScript Config: Set "module": "ES2022" and "target": "ES2022"

- Import Statements: Use ES6 import syntax consistently

This configuration ensures that your compiled JavaScript is compatible with the MCP SDK’s ES module requirements. During development with tools like tsx, this might not be apparent, but it becomes critical when AI clients attempt to load your server.
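For reference, a tsconfig.json along these lines would match the settings described above. This is a sketch, not the exact file from my project: the `outDir`, `rootDir`, `strict`, and `include` values are assumptions chosen so that the compiled entry point lands at dist/index.js. Depending on your TypeScript version you may also need an explicit `moduleResolution` setting, which is left out here.

```json
{
  "compilerOptions": {
    "target": "ES2022",
    "module": "ES2022",
    "outDir": "dist",
    "rootDir": "src",
    "strict": true
  },
  "include": ["src"]
}
```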

MCP Client Configuration

Integrating your MCP server with AI clients requires updating their respective configuration files. The setup varies depending on your chosen AI development environment:

Claude Desktop Configuration

For Claude Desktop, update the configuration file. Its location depends on your operating system:

- macOS: ~/Library/Application Support/Claude/claude_desktop_config.json

- Windows: %APPDATA%\Claude\claude_desktop_config.json

    {
      "mcpServers": {
        "postman": {
          "command": "node",
          "args": ["/path/to/your/server/dist/index.js"],
          "env": {
            "POSTMAN_API_KEY": "your_api_key_here"
          }
        }
      }
    }

Cursor IDE Configuration

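Cursor reads MCP server definitions from an mcp.json file. At the time of writing, this is .cursor/mcp.json in your project or ~/.cursor/mcp.json for a global setup, and it uses the same "mcpServers" shape as Claude Desktop; check Cursor’s current documentation if the file is not picked up:

    {
      "mcpServers": {
        "postman": {
          "command": "node",
          "args": ["/path/to/your/server/dist/index.js"],
          "env": {
            "POSTMAN_API_KEY": "your_api_key_here"
          }
        }
      }
    }

GitHub Copilot Integration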

For GitHub Copilot with compatible IDEs, configure the MCP server through the Copilot extension settings or workspace configuration file.
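As a rough sketch for VS Code specifically: at the time of writing, Copilot’s agent mode reads MCP servers from a workspace file at .vscode/mcp.json, and the top-level key is "servers" rather than "mcpServers". Treat the exact file name and keys as assumptions to verify against the current VS Code documentation, since this area changes quickly.

```json
{
  "servers": {
    "postman": {
      "type": "stdio",
      "command": "node",
      "args": ["/path/to/your/server/dist/index.js"],
      "env": {
        "POSTMAN_API_KEY": "your_api_key_here"
      }
    }
  }
}
```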

IntelliJ IDEA Setup

IntelliJ-based IDEs can integrate MCP servers through plugins or direct configuration depending on the specific MCP client implementation available.

Security Note: While including the API key in the configuration works for local development, consider using system environment variables for production deployments.
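On the server side, one way to follow this advice is to read the key from the environment at startup and fail fast when it is missing. The sketch below is an assumption about how such a check could look; `ServerConfig`, `loadConfig`, and `maskKey` are hypothetical names, not part of the MCP SDK or the original server.

```typescript
interface ServerConfig {
  postmanApiKey: string;
}

// Reads the key from an environment map and fails fast when it is absent.
function loadConfig(env: Record<string, string | undefined>): ServerConfig {
  const postmanApiKey = env.POSTMAN_API_KEY?.trim();
  if (!postmanApiKey) {
    throw new Error("POSTMAN_API_KEY is not set; export it before starting the server");
  }
  return { postmanApiKey };
}

// Masks a key for log output so the full secret never ends up in logs.
function maskKey(key: string): string {
  return key.length <= 8 ? "****" : `${key.slice(0, 4)}...${key.slice(-4)}`;
}

// Typical usage at startup (Node.js):
//   const config = loadConfig(process.env);
//   console.error(`Using Postman key ${maskKey(config.postmanApiKey)}`);
```

Passing the environment in as a parameter keeps the function easy to test, and masking the key means you can still confirm which key is in use without exposing it.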

Real-World Usage Scenarios

Once integrated, your AI assistant can work directly against your collections. Here are some practical scenarios where this integration shines:

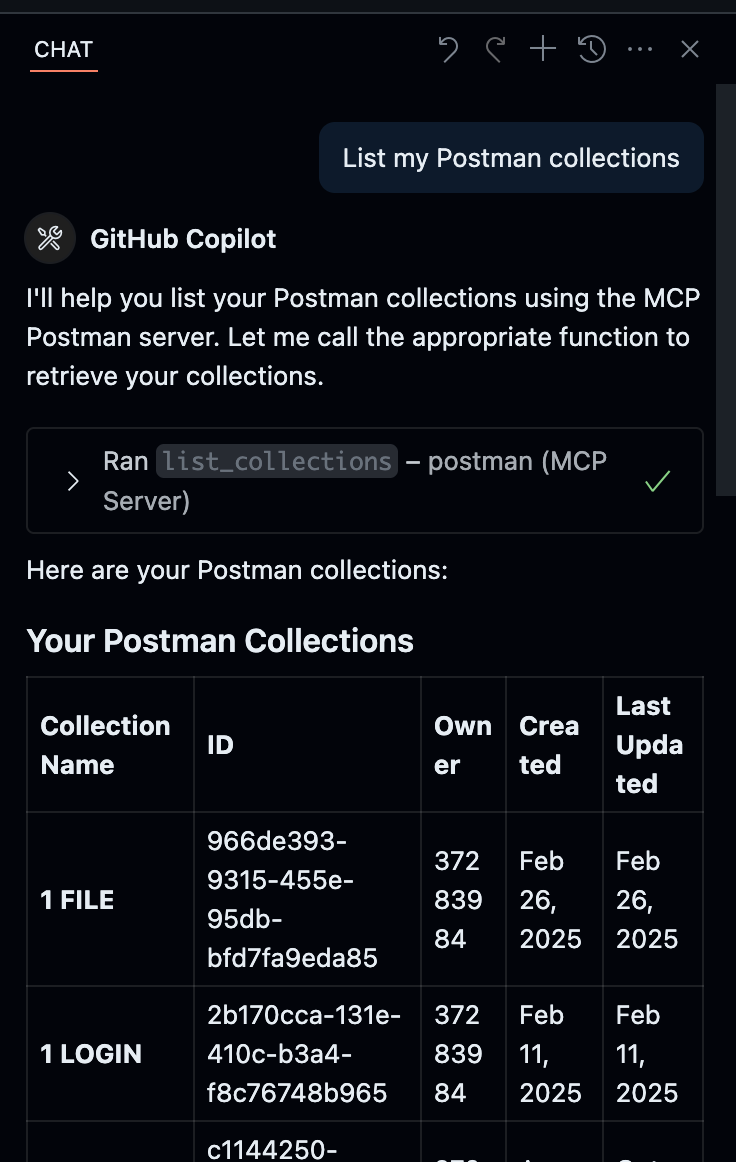

Testing the Integration with GitHub Copilot

Let’s see the integration in action. After setting up the MCP server and configuring it with GitHub Copilot, you can start interacting with your Postman collections directly through your AI assistant.

GitHub Copilot demonstrating successful MCP integration by listing Postman collections, showing collection names, IDs, and metadata in a structured format

As shown in the screenshot above, GitHub Copilot can seamlessly access and list your Postman collections using the MCP server. The AI assistant understands the structure of your API collections and can provide detailed information about each collection, including IDs, creation dates, and ownership details.

API Documentation Generation

Ask your AI assistant to analyze your collections and generate comprehensive documentation. It can identify common patterns, suggest improvements, and even create OpenAPI specifications based on your existing requests.

Test Case Generation

AI assistants can examine your API endpoints and generate comprehensive test cases, including edge cases you might not have considered. They understand the relationship between different endpoints and can create realistic test scenarios.

Environment Comparison

With access to multiple environments, AI can help you identify discrepancies between development, staging, and production configurations, ensuring consistency across your deployment pipeline.
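To show the kind of comparison involved, here is a minimal sketch that diffs two environments by variable name. It assumes the `values` array shape that Postman environments use (entries with `key`, `value`, and an optional `enabled` flag). The `diffEnvironments` helper and the sample data are hypothetical and only for illustration.

```typescript
// Shape of the entries in a Postman environment's `values` array.
interface EnvironmentValue {
  key: string;
  value: string;
  enabled?: boolean;
}

interface EnvironmentDiff {
  onlyInFirst: string[];
  onlyInSecond: string[];
  differing: string[];
}

// Builds a key/value lookup, skipping variables that are explicitly disabled.
function toLookup(values: EnvironmentValue[]): Map<string, string> {
  const lookup = new Map<string, string>();
  for (const entry of values) {
    if (entry.enabled !== false) lookup.set(entry.key, entry.value);
  }
  return lookup;
}

// Reports variable names that are missing on one side or differ in value.
function diffEnvironments(
  first: EnvironmentValue[],
  second: EnvironmentValue[],
): EnvironmentDiff {
  const a = toLookup(first);
  const b = toLookup(second);
  const diff: EnvironmentDiff = { onlyInFirst: [], onlyInSecond: [], differing: [] };
  a.forEach((value, key) => {
    if (!b.has(key)) diff.onlyInFirst.push(key);
    else if (b.get(key) !== value) diff.differing.push(key);
  });
  b.forEach((_value, key) => {
    if (!a.has(key)) diff.onlyInSecond.push(key);
  });
  return diff;
}

// Made-up sample environments.
const staging: EnvironmentValue[] = [
  { key: "baseUrl", value: "https://staging.example.com" },
  { key: "timeoutMs", value: "5000" },
  { key: "debug", value: "true" },
];
const production: EnvironmentValue[] = [
  { key: "baseUrl", value: "https://api.example.com" },
  { key: "timeoutMs", value: "5000" },
  { key: "apiVersion", value: "v2" },
];

const environmentDiff = diffEnvironments(staging, production);
```

The result contains only variable names, not values, so it can be handed to an AI assistant without leaking secrets stored in the environments.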

Also Read: Cursor vs. VS Code vs. Windsurf: Best AI Code Editor in 2025? for choosing the optimal development environment for AI-powered coding.

Troubleshooting Common Issues

During my implementation, I encountered several challenges that are worth sharing:

Path Resolution Problems

Ensure your configuration points to the compiled JavaScript file (dist/index.js), not the source directory. This is a common oversight that leads to “file not found” errors.

API Key Validation

Test your Postman API key independently before integrating it with the MCP server. Invalid keys often produce generic error messages that can be misleading.
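One way to run that independent check is a small script that calls the Postman API’s `/me` endpoint with the key in the `X-Api-Key` header. The sketch below assumes that endpoint and header, and treats HTTP 401 as a rejected key. The `FetchLike` type and `checkPostmanApiKey` function are hypothetical names; the fetch function is passed in so the check can be exercised without network access.

```typescript
// Minimal subset of the fetch API that this check relies on.
type FetchLike = (
  url: string,
  init: { headers: Record<string, string> },
) => Promise<{ ok: boolean; status: number }>;

type KeyCheckResult = "accepted" | "rejected" | "unknown";

// Asks the Postman API who the key belongs to and classifies the outcome.
async function checkPostmanApiKey(
  apiKey: string,
  fetchImpl: FetchLike,
): Promise<KeyCheckResult> {
  const response = await fetchImpl("https://api.getpostman.com/me", {
    headers: { "X-Api-Key": apiKey },
  });
  if (response.ok) return "accepted";
  if (response.status === 401) return "rejected";
  // Rate limits or server errors say nothing about the key itself.
  return "unknown";
}

// Typical usage (Node.js 18+ provides a global fetch):
//   checkPostmanApiKey(apiKey, fetch).then((result) => console.error(result));
```

Separating "rejected" from "unknown" helps with exactly the problem described above: a throttled or failing request no longer looks like a bad key.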

Module Loading Errors

If you encounter ES module errors, double-check your TypeScript configuration and ensure all import statements use ES6 syntax.

Performance Considerations

The integration performs well for most use cases, but there are optimization opportunities:

- Caching: Implement response caching for frequently accessed collections

- Pagination: Handle large collections gracefully with pagination

- Rate Limiting: Respect Postman’s API rate limits to avoid throttling
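As a starting point for the caching idea, here is a minimal sketch of a time-based cache that wraps any async loader. The `TtlCache` class is a hypothetical helper rather than part of the original server, and the clock is injectable so expiry can be tested without waiting.

```typescript
// Caches loader results per key for a fixed time-to-live.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached value while it is fresh; otherwise calls the loader.
  async getOrLoad(key: string, loader: () => Promise<V>): Promise<V> {
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) {
      return hit.value;
    }
    const value = await loader();
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }

  // Drops one entry, for example after a collection is known to have changed.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}

// Typical usage: cache the collection list for 60 seconds.
//   const cache = new TtlCache<unknown>(60_000);
//   const collections = await cache.getOrLoad("collections", () => fetchCollections());
// (fetchCollections is a placeholder for your own Postman API call.)
```

Because repeated tool calls within the time-to-live never reach the Postman API, this also helps with the rate-limit concern. Note that two concurrent callers can both miss and both load; that is acceptable for a sketch but worth handling in a busier server.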

Future Enhancement Possibilities

This integration opens doors to numerous enhancements:

- Real-time Monitoring: Integration with monitoring tools to track API performance

- Automated Testing: Schedule regular collection runs and analyze results

- Version Control: Track changes in your API collections over time

- Team Collaboration: Share insights and findings with team members

Conclusion

Integrating Postman collections with AI clients via MCP transforms how you work with APIs across different development environments. Instead of treating AI and API testing as separate workflows, you create a unified environment where your AI assistant understands your APIs as well as you do, regardless of whether you’re using Claude Desktop, Cursor, GitHub Copilot, or IntelliJ.

The initial setup requires some technical investment, but in my experience the productivity gains show up quickly. You’ll find yourself analyzing API patterns, generating test cases, and debugging issues with far less context switching.

The Model Context Protocol represents a fundamental shift in how we think about AI integration. Rather than forcing AI to work with limited context, we’re expanding its understanding to include the tools and data that matter most to our work across multiple platforms.

As the MCP ecosystem continues to evolve, integrations like this will become increasingly sophisticated and support more AI clients. The foundation we’ve built here can be extended to include other tools in your development workflow, creating a comprehensive AI-powered development environment that works with your preferred AI assistant.

Start with this Postman integration, and you’ll quickly discover opportunities to connect your AI tools with other services in your stack. The future of AI-assisted development is not just about better models – it’s about better integration with the tools we already use and love, accessible through any MCP-compatible client.